Creative Design & Development

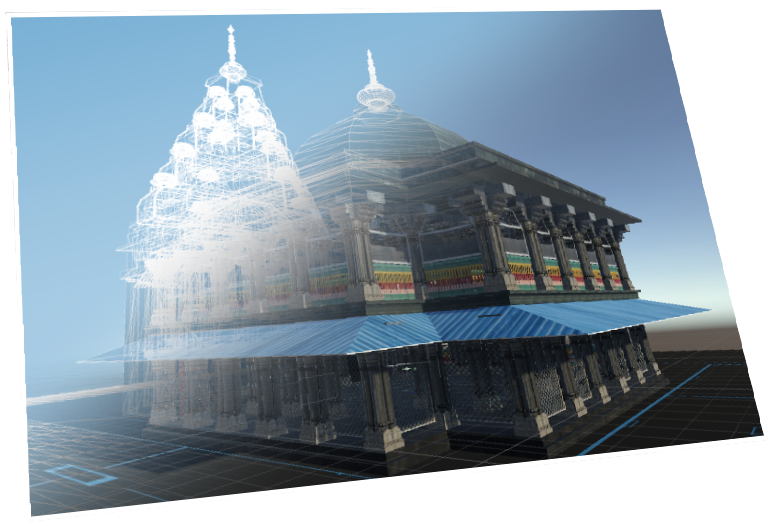

As a designer and developer for 15+ years, I’ve worked with a wide range of clients and colleagues on projects of every kind. My areas of expertise include graphic design, frontend development, academic research projects, and education. My passion projects include 3D and VR development, game design, animation, and music.